DaVinci RL Needle Orientation Task

Motivation

In real surgical settings, tools are often not presented in ideal orientations. Minor misalignments can significantly increase task difficulty or risk. Correcting tool orientation is therefore a fundamental skill for autonomous or semi-autonomous surgical systems.

From a learning perspective, orientation correction is challenging because:

The task is highly sensitive to contact geometry

Orientation error is nonlinear and discontinuous

Naive rewards encourage unstable or overly aggressive motion

This project addresses these challenges through structured task design and phase-aware learning, rather than relying on a single dense reward.

Overview

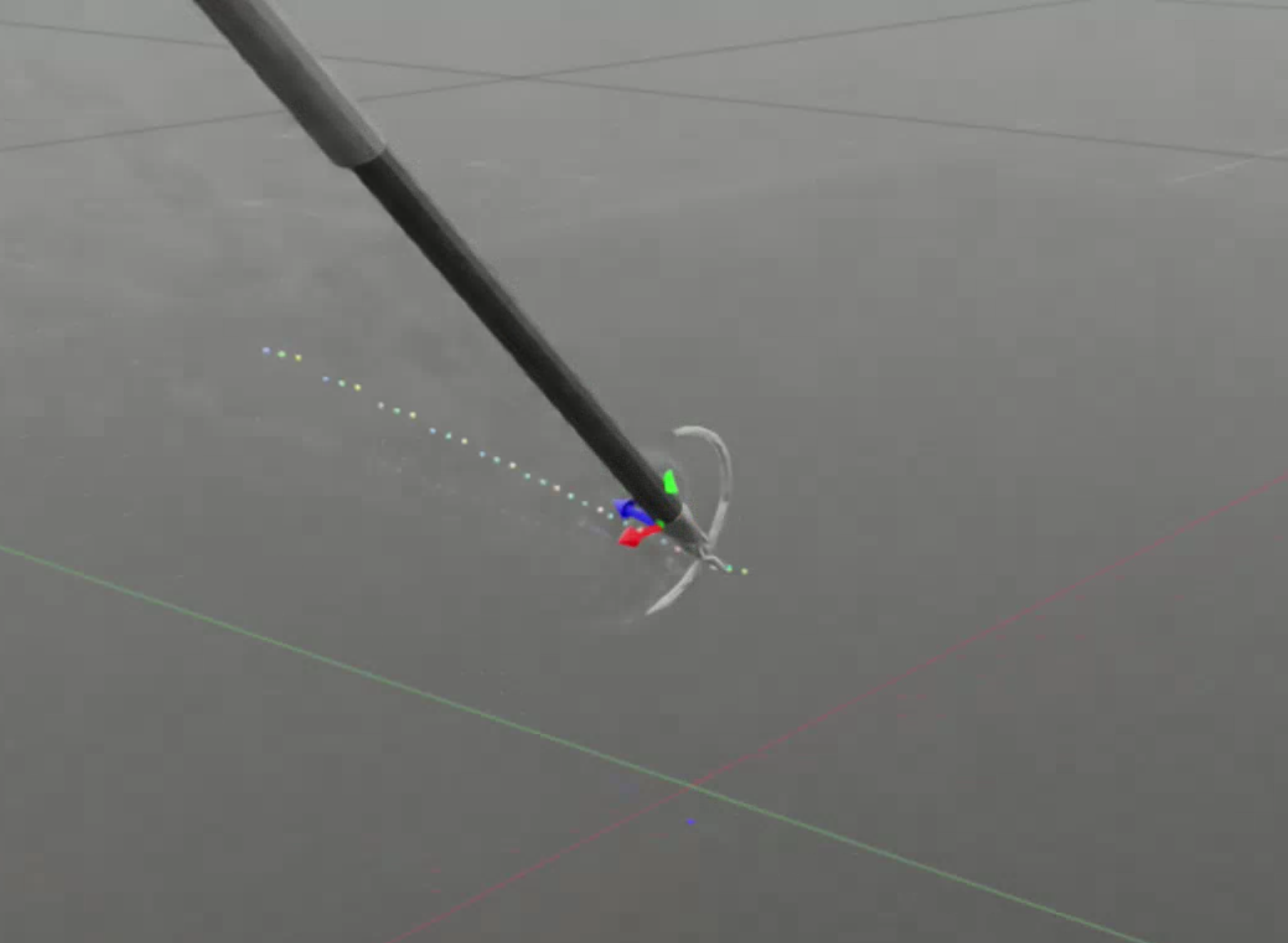

This project focuses on training a reinforcement learning policy to autonomously correct the orientation of a surgical needle using a robotic manipulator. The needle is initially misaligned by up to ±30 degrees and must be reoriented so that its sharp edge points upward, a prerequisite for safe and precise surgical manipulation.

The task is implemented in Isaac Lab with Orbit Surgical, emphasizing contact-rich manipulation, orientation control, and robust learning under geometric constraints. Rather than relying on hard-coded trajectories, the robot learns a sequence of behaviors—approach, contact, and correction—through a staged reinforcement learning framework.

Task Setup & Environment Design

The environment consists of:

One active robotic arm (dual-gripper surgical manipulator)

A surgical needle fixed via a joint, allowing rotation about a defined axis

No second robot holding the needle (removed for simplicity and stability)

The needle’s initial orientation is randomized within ±30° around its rotation axis at reset. By fixing the needle with a joint rather than a second robot, the environment:

Reduces unnecessary dynamics and failure modes

Improves training stability

Isolates the learning problem to intentional interaction, not inter-robot coordination

This design choice makes the task both cleaner and more representative of constrained surgical fixtures.

Learning & Control

The needle orientation task is trained using a structured reinforcement learning framework that combines phased task decomposition, task-aware observations and actions, and stable policy optimization. Rather than relying on a single dense reward, the learning problem is explicitly organized around the physical stages required to correct the needle’s orientation.

Phased task structure

The task is decomposed into sequential phases that mirror meaningful interaction steps:

Reach – move the gripper tips toward the needle without contact

Assess orientation – determine whether the needle is misaligned beyond tolerance

Contact – establish controlled contact at a valid interaction point

Correct – nudge the needle to reduce orientation error within the ±30° range

Progression is tracked using internal phase flags, and rewards are issued only upon successful phase transitions. This prevents reward hacking, reduces instability from dense shaping, and encourages deliberate, interpretable behavior.

Guided path generation

During the correction phase, the robot does not learn arbitrary free-space motions. Instead, a task-aware path generator provides a structured reference for how the needle should be reoriented. This path is defined relative to the needle’s rotational joint, producing a smooth, constrained arc that respects the underlying geometry of the task.

The reinforcement learning policy learns to:

Follow this reference path through contact

Regulate interaction timing and direction

Correct orientation without destabilizing the needle

This hybrid approach—path guidance plus learning—reduces exploration burden, improves training stability, and leads to smoother corrective motions compared to purely unconstrained RL.

Observations and actions

The policy observes a compact, task-relevant state that includes:

Gripper tip positions and orientations

Needle orientation relative to the target frame

Proximity and contact-related signals

Current phase indicators

Actions are continuous joint-level commands controlling arm motion and gripper behavior. Importantly, learning is grounded in local interaction geometry—the policy reasons about how and where to make contact, not just where the end effector should go.

Learning algorithm

Training is performed using Proximal Policy Optimization (PPO) within Isaac Lab’s manager-based environment and RSL-RL integration. PPO is chosen for its stability in continuous control tasks and its robustness to noisy contact dynamics, which are unavoidable in manipulation problems.

The reward function focuses purely on task progression and success, prioritizing simplicity and interpretability. While constraint- or cost-based formulations (e.g., penalizing unsafe contact or excessive force) are a viable alternative and were considered, they introduce additional hyperparameters and tuning complexity. For this task, phased rewards alone were sufficient to produce stable and physically reasonable behavior.

Resulting behavior

This learning structure leads to emergent behaviors that resemble human tool handling:

Conservative approach before contact

Small, precise corrective motions rather than aggressive pushes

Exploitation of the needle’s rotational joint constraint instead of fighting it

The result is a policy that reliably corrects needle orientation while remaining smooth, stable, and interpretable.

Video Demonstration of the Nudging Process

This project is still ongoing, more information will be released when it is complete.