Cable-Driven Robotic Arm with Tension-Aware Reinforcement Learning Algorithm

Introduction

Cable-driven robotic systems offer a compelling alternative to traditional transmissions such as gears and belts. By relocating motors away from moving joints, cable actuation enables low inertia, high back-drivability, and zero backlash, properties that are especially valuable for lightweight, dexterous robotic manipulation. Despite these advantages, cable-driven robots remain uncommon in practice due to one major limitation: poor durability caused by excessive and uneven cable tension, which accelerates fatigue, creep, and maintenance demands.

This project explores how control intelligence, rather than hardware alone, can be leveraged to extend the operational lifespan of cable-driven robots. We introduce a Cable-Aware Reinforcement Learning (CARL) framework that explicitly incorporates cable tension into the control loop. Instead of merely tracking a desired trajectory, the controller learns to distribute motion across joints in a way that minimizes cable tension while maintaining positional accuracy, even in the presence of nonlinear effects such as friction, compliance, and cable stretch.

I am mainly responsible for the software and control side of this project, including simulation, reinforcement learning, and control integration. However, for clarity and completeness, the hardware design and mechanical considerations are also described.

Hardware & Components

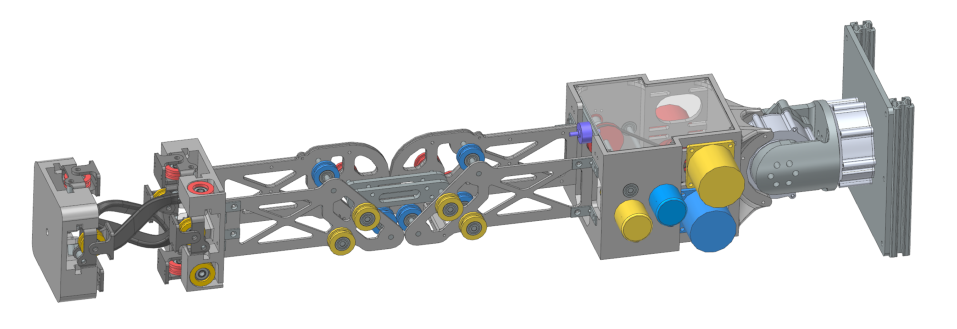

To support tension-aware control, a custom cable-driven robotic arm was designed with simplicity, durability, and measurement fidelity as primary goals. The arm features a lightweight, low-inertia architecture where motors are placed away from distal joints and motion is transmitted through steel cables routed over minimal pulley paths.

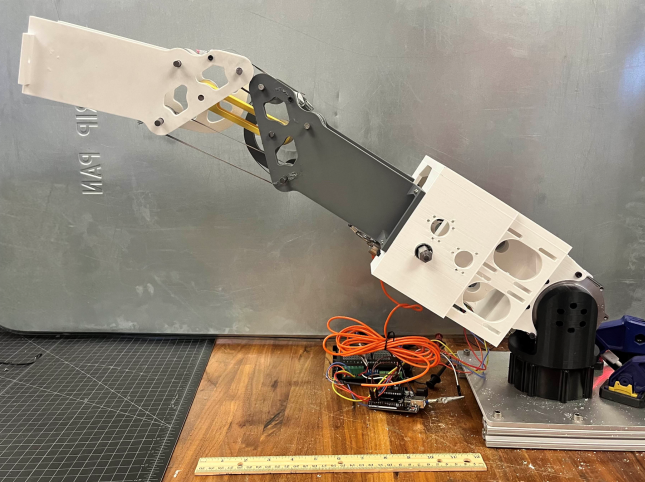

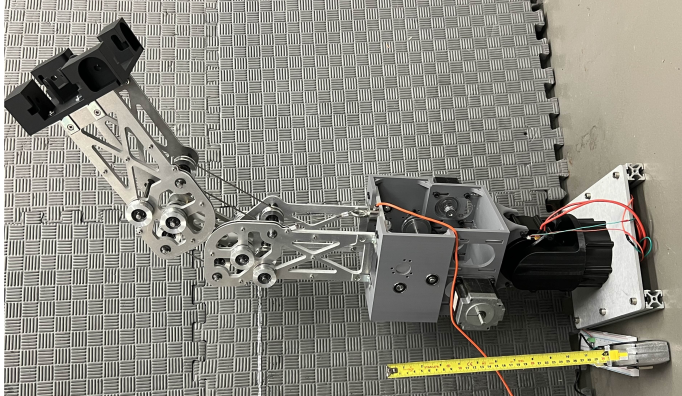

Early prototypes used 3D-printed linkages to enable rapid iteration, but testing revealed plastic deformation under cable tension. The final design replaces critical load-bearing components with waterjet-cut aluminum linkages, significantly improving stiffness and repeatability while preserving the weight advantages of cable-driven transmission. Steel shafts with machined grooves and retaining rings constrain pulley motion and reduce misalignment, further improving mechanical reliability.

Cable routing was intentionally kept minimal to reduce sharp bends, frictional losses, and fatigue. Crucially, the elbow actuation cable is terminated on a load cell, providing real-time tension measurements used both for experimental validation and as feedback to the learning-based controller.

Electrically, the system combines stepper motors for cable actuation and brushless DC motors for base motion, coordinated through microcontrollers that interface with the learned policy. This hardware configuration enables precise tracking while exposing internal cable forces, which makes it possible to study directly how control strategies influence cable longevity.

CAD Design

Final Design

3D-Printed Prototype

Simulation

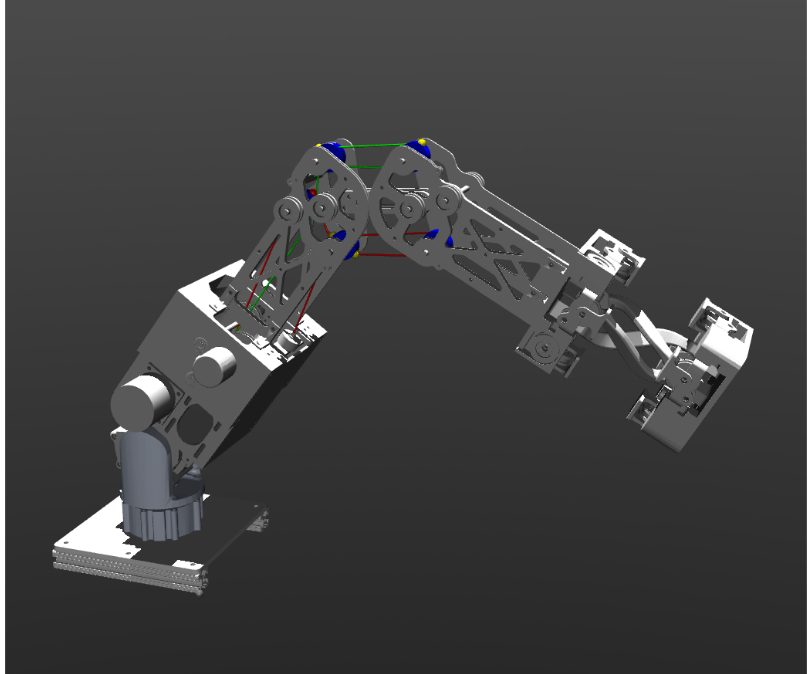

To train and evaluate the tension-aware controller, we built a physics-based simulation of the cable-driven arm in MuJoCo, chosen for its strong support of tendon modeling and reliable contact/rigid-body dynamics. The robot model was created by exporting the SolidWorks assembly to URDF (via a third-party tool) and then manually converting the URDF into MuJoCo’s XML/MJCF format due to the lack of a direct CAD-to-MuJoCo exporter.

In simulation, elbow actuation is implemented with tendons whose length is varied to drive joint motion. This differs from the physical arm, where elbow motion comes from cable wrapping/unwrapping around a shaft. The simplification avoids modeling many individual pulleys (each with unknown stiffness/damping parameters that would require extensive tuning), while still capturing the behavior most relevant to our goal: comparing tension between controllers once the arm settles at a commanded target.
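As a rough illustration of length-driven tendon actuation, the sketch below embeds a minimal MJCF fragment in Python and inspects it with the standard library. It is not the project's model: every body, site, tendon, and actuator name, along with all dimensions and the `kp` gain, is a placeholder chosen for this example. It assumes a spatial tendon between two sites, a position actuator whose control input is the target tendon length, and an actuator-force sensor as a stand-in for cable tension.

```python
import xml.etree.ElementTree as ET

# Hypothetical MJCF fragment: names, sizes, and gains are placeholders,
# not values taken from the actual arm model.
MJCF = """
<mujoco model="tendon_elbow_sketch">
  <worldbody>
    <body name="upper_arm" pos="0 0 0.5">
      <geom type="capsule" fromto="0 0 0 0.3 0 0" size="0.02"/>
      <site name="anchor_upper" pos="0.05 0 0.04"/>
      <body name="forearm" pos="0.3 0 0">
        <joint name="elbow" type="hinge" axis="0 1 0"/>
        <geom type="capsule" fromto="0 0 0 0.25 0 0" size="0.02"/>
        <site name="anchor_forearm" pos="0.08 0 0.04"/>
      </body>
    </body>
  </worldbody>
  <tendon>
    <spatial name="elbow_cable">
      <site site="anchor_upper"/>
      <site site="anchor_forearm"/>
    </spatial>
  </tendon>
  <actuator>
    <!-- The control input is the target tendon length. -->
    <position name="elbow_winch" tendon="elbow_cable" kp="500"/>
  </actuator>
  <sensor>
    <!-- Actuator force, used here as a proxy for cable tension. -->
    <actuatorfrc name="cable_tension" actuator="elbow_winch"/>
  </sensor>
</mujoco>
""".strip()

# Parse the fragment and confirm the actuator is wired to the tendon.
root = ET.fromstring(MJCF)
tendon = root.find("./tendon/spatial")
actuator = root.find("./actuator/position")
print(tendon.get("name"), "is driven by", actuator.get("name"))
```

With the `mujoco` Python package installed, the same string could be loaded through `mujoco.MjModel.from_xml_string(MJCF)`; the standard-library parse above only checks the structure.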

To keep the simulated arm consistent with hardware kinematics, tendon–joint relationships and rolling-surface joints were enforced using equality constraints in the MuJoCo model. Simulation validity was checked by comparing normalized cable tension against measurements from the real arm: the model aligns well at the end of actions, even though it does not fully reproduce the initial transient effects caused by real cable winding and nonlinear routing.
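One way such an end-of-action comparison could be computed is sketched below. The normalization (dividing each trace by its own peak) and the length of the settling window are assumptions made for illustration, and the traces are invented numbers, not measurements from the arm.

```python
def normalize(trace):
    """Scale a tension trace by its peak magnitude (assumed normalization)."""
    peak = max(abs(x) for x in trace)
    if peak == 0:
        raise ValueError("trace is all zeros")
    return [x / peak for x in trace]


def settled_mismatch(sim, real, window=3):
    """Mean absolute gap between normalized traces over the final samples."""
    sim_tail = normalize(sim)[-window:]
    real_tail = normalize(real)[-window:]
    pairs = list(zip(sim_tail, real_tail))
    return sum(abs(a - b) for a, b in pairs) / len(pairs)


# Invented traces: the transients differ, the settled values agree.
sim_trace = [0.0, 2.0, 4.0, 4.0, 4.0]
real_trace = [0.0, 9.0, 10.0, 10.0, 10.0]
print(settled_mismatch(sim_trace, real_trace))
```

In this toy case the two traces disagree during the transient but match once settled, which mirrors the qualitative behavior described above.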

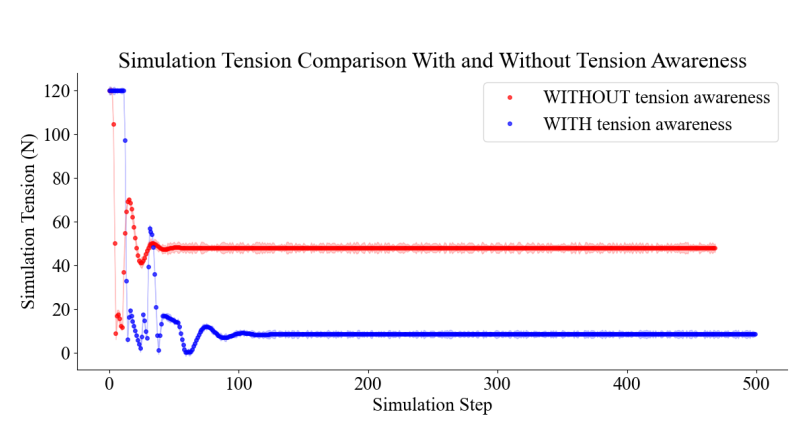

This simulation environment enabled reinforcement learning training and controlled ablation studies (tension-aware vs baseline) before moving toward full sim-to-real deployment.

Robotic Arm in MuJoCo Simulation Environment

Learning & Control

The core of this project is CARL (Cable-Aware Reinforcement Learning): a control framework that learns motions which track a desired end-effector trajectory while actively minimizing cable tension, reducing long-term wear from creep and fatigue.

Formulating the task for RL

We model the control problem as a Markov Decision Process (MDP). The policy observes a state that captures both the arm’s task-relevant motion and its internal loading: end-effector pose, tracking error, and measured or simulated cable tension. Actions are continuous motor commands that drive the joints.
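A minimal sketch of how such an observation might be assembled is shown below. The `ArmState` container, its field names, and the ordering of the vector are hypothetical, and the sketch uses end-effector position only, leaving out orientation for brevity.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ArmState:
    """Hypothetical container for the quantities the policy observes."""
    ee_position: Sequence[float]      # end-effector position (x, y, z)
    target_position: Sequence[float]  # desired point on the trajectory
    cable_tension: float              # load-cell or simulated tension, in N


def build_observation(state: ArmState) -> List[float]:
    """Concatenate position, tracking error, and tension into one flat vector."""
    error = [t - p for t, p in zip(state.target_position, state.ee_position)]
    return [*state.ee_position, *error, state.cable_tension]


state = ArmState(ee_position=(0.1, 0.0, 0.3),
                 target_position=(0.2, 0.0, 0.25),
                 cable_tension=12.0)
observation = build_observation(state)
print(len(observation))
```

The same function could serve simulation and hardware, with only the source of `cable_tension` changing.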

Why PPO

We train the controller using Proximal Policy Optimization (PPO), an actor-critic method selected for its stability in continuous control under noisy dynamics. PPO’s clipped policy updates help prevent unstable jumps during training, which is important when the reward balances competing objectives (accuracy vs tension reduction).
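The clipping mentioned here refers to PPO's standard clipped surrogate objective. The per-sample sketch below shows the idea; the clip range of 0.2 is a commonly used default and an assumption here, not a value taken from this project.

```python
def clipped_surrogate(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample PPO objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratio is pi_new(a|s) / pi_old(a|s); advantage is the estimated advantage.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with a positive advantage is capped at (1 + epsilon) * A,
# so the update gains nothing from pushing the policy further.
capped = clipped_surrogate(ratio=1.5, advantage=2.0)

# With a negative advantage the unclipped term is the minimum, so a move
# toward a bad action is still penalized in full.
penalized = clipped_surrogate(ratio=1.5, advantage=-1.0)
print(capped, penalized)
```

Taking the minimum makes the objective a pessimistic bound, which is what limits the size of each policy update.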

Reward design: accuracy + longevity

To avoid “good tracking but damaging tension,” the reward is split into two main components:

- Tracking performance (stay close to the target trajectory)
- Tension awareness (penalize high tension and penalize rapid tension changes to encourage smoother loading)

In practice, tension awareness is implemented in two ways: tension is part of the observation, and the reward penalizes both tension magnitude and squared changes in tension.
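A reward with this structure could look like the sketch below. The function name, the weights `w_track`, `w_tension`, and `w_delta`, and the use of Euclidean distance for tracking error are all illustrative assumptions; the actual reward terms and coefficients are not given here.

```python
import math


def tension_aware_reward(ee_pos, target_pos, tension, prev_tension,
                         w_track=1.0, w_tension=0.01, w_delta=0.001):
    """Negative cost combining tracking error, tension, and tension change.

    All weights are placeholders chosen for illustration only.
    """
    tracking_error = math.dist(ee_pos, target_pos)  # Euclidean distance
    tension_change = tension - prev_tension
    cost = (w_track * tracking_error
            + w_tension * abs(tension)
            + w_delta * tension_change ** 2)
    return -cost


# Same tracking error, different loading: the lower, steadier tension scores higher.
gentle = tension_aware_reward((0.2, 0.0, 0.3), (0.2, 0.0, 0.25), 8.0, 8.0)
harsh = tension_aware_reward((0.2, 0.0, 0.3), (0.2, 0.0, 0.25), 48.0, 30.0)
print(gentle > harsh)
```

Setting `w_tension` and `w_delta` to zero recovers a tracking-only reward, which is one way to define a baseline without tension awareness.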

What the policy learns

A key emergent behavior is that the tension-aware policy learns to reduce motion at the elbow (where cable tension is most sensitive) and instead relies more on other joints to achieve the same end-effector movement. In contrast, a baseline controller without tension awareness actuates the elbow more aggressively, producing higher tension.

Results

Compared to the baseline, the tension-aware PPO policy achieves an 82% reduction in cable tension (48 N → 8.6 N). Using fatigue-life scaling (Basquin-style relationship), this corresponds to a ~974× theoretical increase in cable fatigue life, while still maintaining acceptable tracking accuracy.
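The arithmetic behind these figures can be sketched as follows. In a Basquin-style power law, fatigue life scales as load raised to a negative exponent, so the life ratio is the tension ratio raised to that exponent. The exponent is not stated here; the value of 4 below is an assumption inferred because it roughly reproduces the reported factor, and the sketch also assumes cable stress is proportional to tension.

```python
def fatigue_life_ratio(tension_before: float, tension_after: float,
                       exponent: float) -> float:
    """Life multiplier under an assumed power law: N proportional to T ** (-exponent)."""
    return (tension_before / tension_after) ** exponent


baseline_tension = 48.0  # N, baseline controller
aware_tension = 8.6      # N, tension-aware policy
assumed_exponent = 4.0   # assumption: inferred, not stated in the write-up

reduction = 1.0 - aware_tension / baseline_tension
life_ratio = fatigue_life_ratio(baseline_tension, aware_tension, assumed_exponent)
print(f"tension reduction: {reduction:.1%}, life ratio: {life_ratio:.0f}x")
```

With the rounded tensions above, this gives a ratio of roughly 970, close to the reported ~974×; the small gap is consistent with the published tensions being rounded. Because the exponent is large, the result is very sensitive to the tension values.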

Comparison of policies with and without tension awareness

Video demonstration of the simulated arm moving to a target point